Scripting for admins

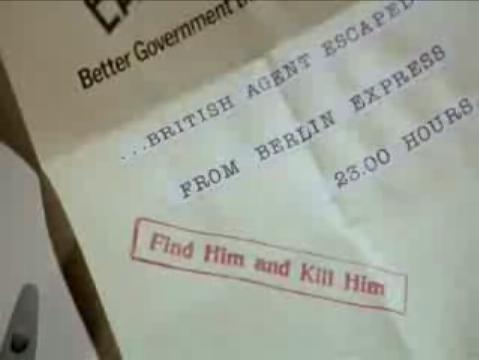

Sometimes admin work feels like being a wizard summoning a demon. Most of the prep time goes into making sure the circle is secure and there is no way for the demon to do anything unwanted, constraining him to do just what is asked and nothing else.

I once wrote a script that I liked, my co-workers liked, and that was genuinely useful. Then an admin ran it with 'root' as the username -- as you might imagine, this was a problem. I added a run-condition test to check that the specified username wasn't root before doing anything. If it was, the script exited with, "No killing root! Bad Admin! No cookie for you!"
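A minimal sketch of that kind of guard, assuming the target username arrives as the script's first argument; the function name `check_target_user` is hypothetical. Beyond the literal name, it also refuses accounts with UID 0 (root under another name) and accounts that don't exist, which goes a little past the original test in the same paranoid spirit.

```sh
#!/bin/sh
# Hypothetical guard: returns 0 only if the target user is safe to act on.
check_target_user() {
    target="$1"
    if [ -z "$target" ]; then
        echo "usage: check_target_user username" >&2
        return 2
    fi
    # The original check: refuse the literal name "root"
    if [ "$target" = "root" ]; then
        echo "No killing root! Bad Admin! No cookie for you!" >&2
        return 1
    fi
    # Refuse accounts that don't exist at all
    if ! uid=$(id -u "$target" 2>/dev/null); then
        echo "no such user: $target" >&2
        return 1
    fi
    # UID 0 is root, whatever the account happens to be called
    if [ "$uid" = "0" ]; then
        echo "No killing root! Bad Admin! No cookie for you!" >&2
        return 1
    fi
    return 0
}
```

In the script itself, the call would be something like `check_target_user "$1" || exit 1`, placed before any work is done.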

I was recently asked to add a crontab entry to almost 392 AIX systems. Half of the code was devoted to making sure it was ready to run. Is this host really running AIX? Do we have admin rights? Is the host on the list? (And before that: "Oh yeah, can we even read the list?") Have we already added the crontab line?
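Those questions translate fairly directly into shell tests. The sketch below is one way to write them; `HOST_LIST`, its default path, and `CRON_MARKER` (a string assumed to appear only in the line being added) are hypothetical names, not taken from the original script. Each check explains why it failed and returns non-zero, so the caller can stop before touching anything.

```sh
#!/bin/sh
# Hypothetical pre-flight checks; names and paths are assumptions.
HOST_LIST="${HOST_LIST:-/usr/local/etc/cron_hosts.txt}"  # one hostname per line
CRON_MARKER="${CRON_MARKER:-LWP::Simple}"                 # text unique to our cron line

preflight() {
    # Is this host really running AIX?
    [ "$(uname -s)" = "AIX" ] || { echo "not AIX" >&2; return 1; }
    # Do we have admin rights?
    [ "$(id -u)" = "0" ] || { echo "not root" >&2; return 1; }
    # Can we even read the list?
    [ -r "$HOST_LIST" ] || { echo "cannot read $HOST_LIST" >&2; return 1; }
    # Is this host on the list? Fixed-string, whole-line match
    # (assumes the list holds names exactly as uname -n prints them).
    grep -F -x "$(uname -n)" "$HOST_LIST" >/dev/null 2>&1 ||
        { echo "$(uname -n) not on list" >&2; return 1; }
    # Have we already added the crontab line?
    if crontab -l 2>/dev/null | grep -F "$CRON_MARKER" >/dev/null; then
        echo "crontab line already present" >&2
        return 1
    fi
    return 0
}
```

The main script would then start with `preflight || exit 1`; the order matters, since there is no point grepping a list we can't read.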

Most of the remainder is me being paranoid and setting variables to the full paths of executables (e.g., TAIL="/usr/bin/tail"). Another -- and shorter -- approach is to set a restrictive $PATH, but I guess I'm used to this now.
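Both approaches side by side, as a sketch. The paths are typical AIX locations but are assumptions here; confirm them on your own hosts. The `require_exec` helper (a hypothetical name) adds one more layer of paranoia: it refuses to continue if any pinned executable is missing.

```sh
#!/bin/sh
# Approach 1: pin each command to a full path (paths are assumptions).
TAIL="/usr/bin/tail"
CRONTAB="/usr/bin/crontab"
RM="/usr/bin/rm"
ECHO="/usr/bin/echo"
PERL="/usr/bin/perl"

# Approach 2 (shorter): restrict PATH to trusted system directories.
PATH="/usr/bin:/usr/sbin"
export PATH

# Fail if any of the given paths is not an executable file.
require_exec() {
    for prog in "$@"; do
        [ -x "$prog" ] || { echo "missing executable: $prog" >&2; return 1; }
    done
    return 0
}
```

Usage: `require_exec "$TAIL" "$CRONTAB" "$RM" "$ECHO" "$PERL" || exit 1`. Pinned paths make each command explicit at the point of use; a restricted PATH is less typing but applies silently to every command.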

At the very start, I set operational variables, such as $MAIL_RCPT for the list of people who receive the email. This makes life so much easier for me, or for anyone who wants to tweak the script months or years down the line.
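A sketch of such a header block, covering the operational variables the script's main section uses. Every value is a placeholder: the addresses are hypothetical, the real URL isn't given in this post, and running on Sunday is an assumption.

```sh
#!/bin/sh
# ---- Operational settings: tweak these, not the logic ----
MAIL_RCPT="admin1@example.com admin2@example.com"  # hypothetical recipients
DAY_TO_RUN=0                                        # cron day-of-week field, 0 = Sunday (assumption)
LSSEC_URL="http://www.example.com/lssec.pl"         # placeholder; the real URL is not given
HOST_S=$(uname -n | cut -d. -f1)                    # short hostname for the mail subject
CRON_TMP="/tmp/crontab.add.$$"                      # scratch copy of the crontab ($$ = this PID)
```

Keeping these in one place means a change of recipients or schedule never requires reading the logic.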

Here's the meat of the script:

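```sh
# Pick a random time of day for the new job
RND_HOUR=$(($RANDOM % 24))   # random hour, 0-23
RND_MIN=$(($RANDOM % 60))    # random minute, 0-59

# Copy the current crontab; stop rather than install a partial copy
$CRONTAB -l > "$CRON_TMP" || exit 1

# "\c" tells AIX echo to suppress the newline, so the second
# echo completes the same crontab line
$ECHO "${RND_MIN} ${RND_HOUR} * * ${DAY_TO_RUN} \c" >> "$CRON_TMP"
$ECHO "$PERL -MLWP::Simple -e 'getprint \"${LSSEC_URL}\"' | /bin/sh" >> "$CRON_TMP"

# Mail the new line to the admins, install the crontab, clean up
$TAIL -1 "$CRON_TMP" | $MAIL -s "$HOST_S crontab appended" $MAIL_RCPT
$CRONTAB "$CRON_TMP"
$RM "$CRON_TMP"
```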

That's all the script really does.

What else could go wrong? Here are a few checks I've come up with since: take the hostname from the URL and make sure nslookup comes back with a result. Check that neither the filesystem holding $CRON_TMP nor /var/spool/cron is full, so I will be able to add to the crontab. Maybe even use wget to make sure I can get a useful file from the complete URL -- in this case, it should be Perl code.
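A sketch of those three checks. The helper names are hypothetical, the 1024 KB threshold is an arbitrary assumption, and `wget` may need to be installed separately on AIX. `host_resolves` assumes nslookup prints a `Name:` line for a successful answer, and `df -P` is used because POSIX output puts available space in the fourth column on both AIX and Linux.

```sh
#!/bin/sh
# Hypothetical helpers for the extra checks; thresholds are assumptions.

# Extract the hostname from a URL such as http://host:port/path
url_host() {
    echo "$1" | sed -e 's|^[A-Za-z]*://||' -e 's|[/:].*$||'
}

# Succeeds if nslookup returns an answer ("Name:" line) for the host
host_resolves() {
    nslookup "$1" 2>/dev/null | grep '^Name:' >/dev/null
}

# Free space in KB on the filesystem holding $1 (POSIX df format)
free_kb() {
    df -P -k "$1" | awk 'NR == 2 { print $4 }'
}

# Succeeds if the filesystem holding $1 has at least $2 KB free
has_free_kb() {
    avail=$(free_kb "$1")
    [ -n "$avail" ] && [ "$avail" -ge "$2" ]
}

# Succeeds if the URL downloads to a non-empty file
url_fetches() {
    tmp="/tmp/url_check.$$"
    if wget -q -O "$tmp" "$1" && [ -s "$tmp" ]; then rc=0; else rc=1; fi
    rm -f "$tmp"
    return $rc
}

# Run all of them against the script's own settings
extra_checks() {
    host=$(url_host "$LSSEC_URL")
    host_resolves "$host" ||
        { echo "cannot resolve $host" >&2; return 1; }
    has_free_kb "$(dirname "$CRON_TMP")" 1024 ||
        { echo "no room for $CRON_TMP" >&2; return 1; }
    has_free_kb /var/spool/cron 1024 ||
        { echo "/var/spool/cron is full" >&2; return 1; }
    url_fetches "$LSSEC_URL" ||
        { echo "cannot fetch $LSSEC_URL" >&2; return 1; }
}
```

`url_fetches` only proves the file is non-empty; checking that it really is Perl would need a stricter, site-specific test.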

This sort of contingency planning (the next blog topic for pilots, I think) is a good idea for any programming, and it's the biggest lock you can use to secure your program against misuse. When you're writing a script that will run as root, day in and day out, possibly for years, on systems that affect the work of dozens, hundreds, or even thousands of users, it needs to be bulletproof. Think of everything that could go wrong and how to prevent it, or at least how to notice it, stop, and yell for help.
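One way to build in "stop and yell for help" is a single `die` function that every failed check calls. A sketch; `MAILER` and the subject wording are assumptions. The mail command is held in `MAILER` rather than `MAIL` because sh-family shells already use `$MAIL` as the path of the user's mailbox.

```sh
#!/bin/sh
# Hypothetical "stop and yell for help" helper.
MAILER="${MAILER:-/usr/bin/mail}"   # not $MAIL: shells use $MAIL for the mailbox path
MAIL_RCPT="${MAIL_RCPT:-root}"

die() {
    msg="$(uname -n): $*"
    echo "ERROR: $msg" >&2
    # Mail the reason; MAIL_RCPT is unquoted so it can hold several addresses
    echo "$msg" | $MAILER -s "$(uname -n): script stopped" $MAIL_RCPT
    exit 1
}
```

Every check then becomes a one-liner, e.g. `[ -r "$HOST_LIST" ] || die "cannot read $HOST_LIST"`, so the script never fails silently.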

We have a high-transaction-volume system that is frankly too busy to do its own backups. So we have a second system running as a hot spare with the same configuration and data. It does the backups and can, in a pinch, replace the primary system within a couple of minutes if the primary has some flavor of heart attack.

System B always stays current with system A: it copies over new files and deletes files that are gone from A. What if A gets rebuilt or renamed, etc.? The sync would erase all the data on B. So I check A's ssh key to make sure the system being cloned is the same one as always. What if the filesystem on A isn't mounted for some reason? Then everything on A would look deleted, and we may need that clone on B to restore it, so no erasing it. I keep a sanity file on A ... if that's missing, there's no clone and an email goes out warning us.
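A sketch of those two guards in front of the clone. The post doesn't name the copy tool; `rsync -a --delete` is assumed here because it copies new files and deletes ones that are gone, as described. The key check leans on OpenSSH's `StrictHostKeyChecking=yes` with a known_hosts file holding only A's key, so ssh refuses to connect (exit status 255) if A's key ever changes. All host names, paths, and the sanity file name are hypothetical.

```sh
#!/bin/sh
# Hypothetical settings for the A -> B clone; adjust for your site.
SRC_HOST="systemA"
SRC_DIR="/data"
DEST_DIR="/data"
SANITY_FILE="/data/.clone_sanity"       # exists only when A's filesystem is mounted
KNOWN_HOSTS="/etc/clone/known_hosts.A"  # contains A's host key and nothing else
MAIL_RCPT="${MAIL_RCPT:-root}"
SSH="${SSH:-/usr/bin/ssh}"
RSYNC="${RSYNC:-/usr/bin/rsync}"
MAILER="${MAILER:-/usr/bin/mail}"

warn() {
    echo "$*" | $MAILER -s "$(uname -n): clone from $SRC_HOST skipped" $MAIL_RCPT
}

clone_from_a() {
    ssh_cmd="$SSH -o BatchMode=yes -o StrictHostKeyChecking=yes -o UserKnownHostsFile=$KNOWN_HOSTS"

    # One connection checks both guards: ssh exits 255 if it can't connect
    # or A's host key doesn't match; otherwise it returns test's status.
    rc=0
    $ssh_cmd "$SRC_HOST" test -f "$SANITY_FILE" || rc=$?

    case $rc in
        0)   ;;
        255) warn "ssh to $SRC_HOST failed (host key changed? host down?); not cloning."
             return 1 ;;
        *)   warn "$SANITY_FILE missing on $SRC_HOST (filesystem not mounted?); not cloning."
             return 1 ;;
    esac

    # Both guards passed: mirror A onto B, deleting files that are gone from A.
    $RSYNC -a --delete -e "$ssh_cmd" "$SRC_HOST:$SRC_DIR/" "$DEST_DIR/"
}
```

Distinguishing exit status 255 from 1 lets the warning say whether the problem was the connection or key, or the missing sanity file.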

Labels: computers
